VMworld Europe 2017 started today at its customary congress venue in Barcelona.

As always, VMware participates with a number of announcements and new products: we will cover the most exciting news.

New VMware HCX technologies for portability and multi-cloud, multi-site integration are now available

VMware HCX is a technology that enables secure, fast and protected mobility and interoperability between different clouds, allowing large applications to be migrated without downtime. As a new solution, it will initially be available on IBM and OVH platforms only, and accessible through VMware Cloud Providers.

Read more ...

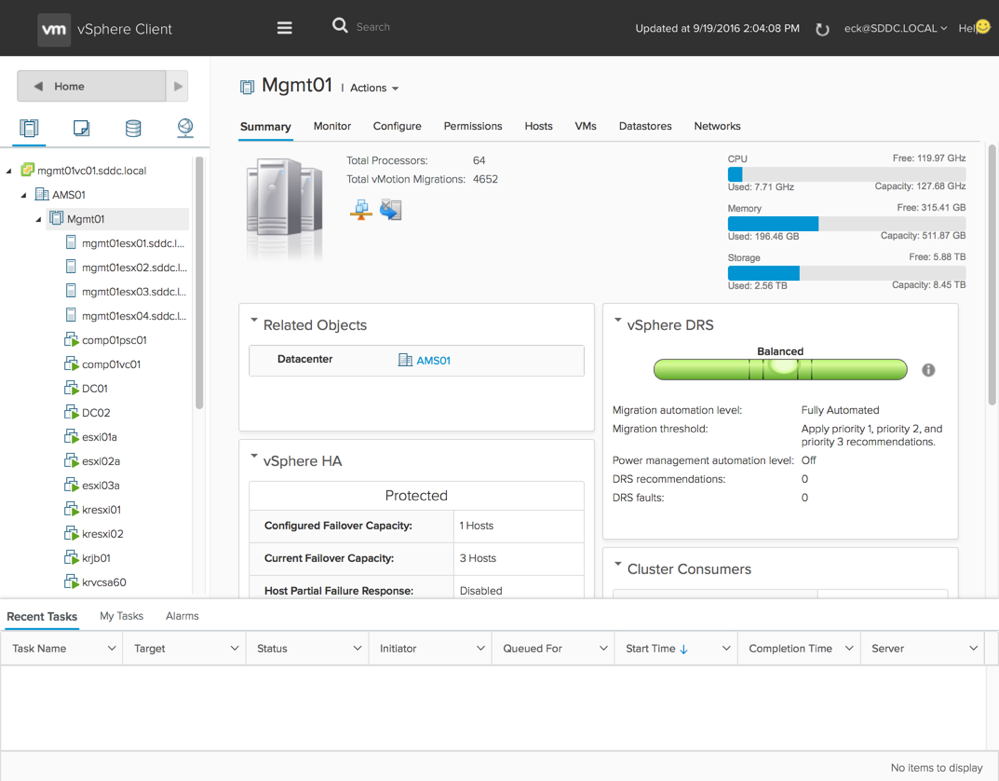

Today VMware announced vSphere 6.5 at VMworld: let’s look at the new features of a release that finally brings important improvements.

VCSA

vCenter Server Appliance now has an installer with a new look that can also be used from Linux and Mac, and the news about VCSA doesn’t end there.

Read more ...

VMworld 2016, the biggest and most important event about the VMware world, is going on these days in Las Vegas. This year’s motto is “be_TOMORROW”.

Among the most interesting topics introduced so far is the new VMware Cloud Foundation platform, a new architecture for building cloud infrastructures. The new Cross-Cloud Architecture (still in Tech Preview) has been presented along with vCloud Availability and vCloud Air Hybrid Cloud Manager. There is also news about better integration between containers and the vSphere environment.

Read more ...

Previous article -> An introduction to Docker pt.2

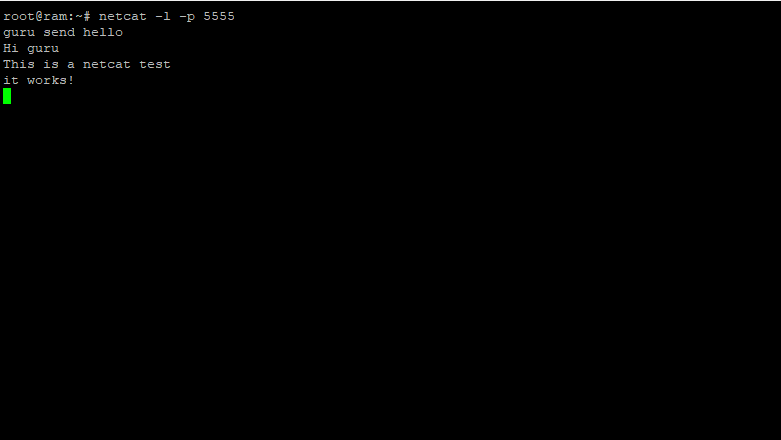

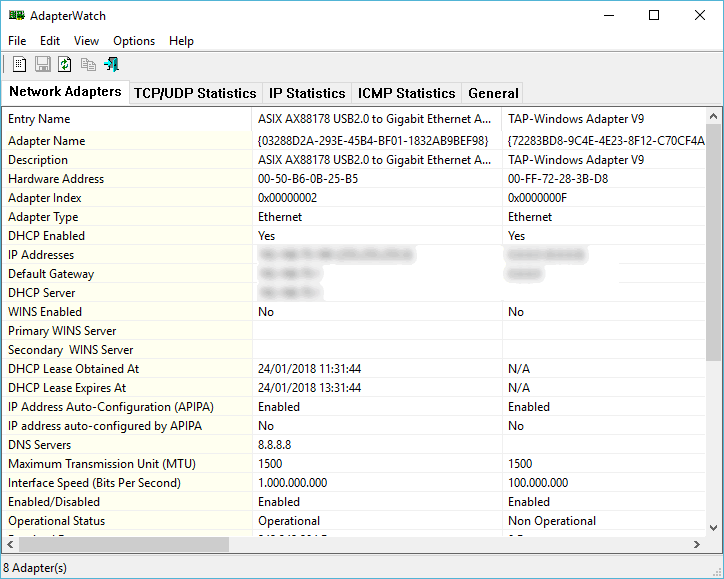

The Docker introduction series continues with a new article dedicated to two fundamental elements of a container ecosystem: volumes and connectivity.

That is, how to let two containers communicate with each other and how to manage data in a given folder on the host.

Storage: volumes and bind-mounts

Files created within a container are stored on a layer that the container itself can write to, with some significant consequences:

- data doesn’t survive the destruction of the container.

- data is hard to get out of the container when another process needs it.

- the aforementioned layer is strictly tied to the host where the container runs, and it can’t be moved between hosts.

- this layer requires a dedicated storage driver, which has an impact on performance.

Docker addresses these problems by allowing containers to perform I/O operations directly on the host with volumes and bind-mounts.
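As a rough illustration of the two options, the sketch below assembles `docker run` command lines that use the `--mount` flag, once with a named volume and once with a bind mount. It only builds and prints the commands and does not call Docker. The helper names, the volume name `app-data`, the host path `/srv/site` and the `nginx` image are assumptions chosen for the example.

```python
import shlex


def mount_flag(kind, source, target, read_only=False):
    """Build the value passed to `docker run --mount`.

    kind   -- "volume" (storage managed by Docker) or "bind" (a host path)
    source -- volume name, or absolute path on the host for a bind mount
    target -- path where the data appears inside the container
    """
    if kind not in ("volume", "bind"):
        raise ValueError(f"unsupported mount type: {kind}")
    parts = [f"type={kind}", f"source={source}", f"target={target}"]
    if read_only:
        parts.append("readonly")
    return ",".join(parts)


def docker_run_command(image, mounts):
    """Return the argv list for a `docker run` with the given mounts."""
    argv = ["docker", "run", "--rm"]
    for mount in mounts:
        argv += ["--mount", mount]
    argv.append(image)
    return argv


# Named volume: Docker manages where the data lives on the host.
volume_cmd = docker_run_command(
    "nginx", [mount_flag("volume", "app-data", "/data")]
)

# Bind mount: a specific host folder is exposed inside the container.
bind_cmd = docker_run_command(
    "nginx",
    [mount_flag("bind", "/srv/site", "/usr/share/nginx/html", read_only=True)],
)

print(shlex.join(volume_cmd))
print(shlex.join(bind_cmd))
```

In both cases the container's I/O on the target path goes to the host rather than to the container's writable layer, so the data outlives the container.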

Read more ...

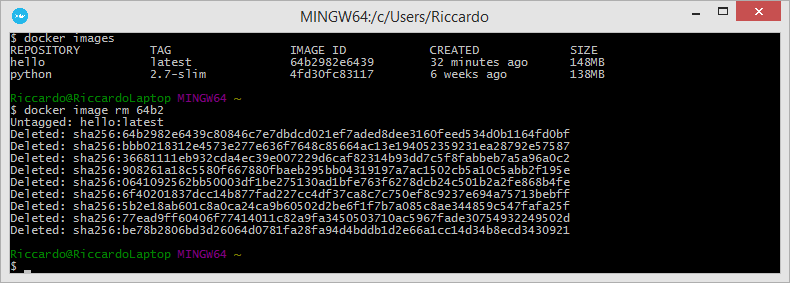

Previous article -> Introduction to Docker - pt.1

Images and Containers

An image is an ordered set of root filesystem updates and related execution parameters to be used in a container runtime; it has no state and is immutable.

A typical image has a limited size, doesn’t require any external dependency and includes all runtimes, libraries, environment variables, configuration files, scripts and everything else needed to run the application.

A container is the runtime instance of an image, that is, what the image becomes in memory when it is run. Generally a container is completely independent from the underlying host, but access to files and networks can be configured to allow communication with other containers or with the host.

Conceptually, an image is the general idea and a container is the actual realization of that idea. One of Docker’s strengths is the ability to create minimal, lightweight and complete images that can be ported to different operating systems and platforms: the related container can always be run, which avoids problems with the compatibility of packages, dependencies, libraries, and so forth. Everything the container needs is already included in the image, and the image is always portable.
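The relationship can be pictured with a small toy model. This is only a conceptual sketch, not how Docker is implemented: the `Image` and `Container` classes, their fields and the file paths are all invented for the illustration. The image holds an ordered set of filesystem updates and is never modified, while each container adds its own private writable layer on top.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Image:
    """Toy image: an ordered tuple of filesystem updates (path -> content)."""
    layers: tuple

    def root_filesystem(self):
        # Apply the updates in order; later layers override earlier ones.
        merged = {}
        for layer in self.layers:
            merged.update(layer)
        return merged


@dataclass
class Container:
    """Toy container: a running instance of an image with its own state."""
    image: Image
    writable_layer: dict = field(default_factory=dict)

    def read(self, path):
        # The container's own changes shadow the image content.
        if path in self.writable_layer:
            return self.writable_layer[path]
        return self.image.root_filesystem()[path]

    def write(self, path, content):
        # Writes never touch the image, only this container's layer.
        self.writable_layer[path] = content


base = Image(layers=({"/etc/motd": "hello"}, {"/app/main.py": "v1"}))
first = Container(base)
second = Container(base)

first.write("/app/main.py", "patched")
print(first.read("/app/main.py"))   # this container sees its own change
print(second.read("/app/main.py"))  # the other container still sees the image
```

Many containers can be started from the same image, and a change made in one of them is invisible to the others and to the image itself.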

Read more ...

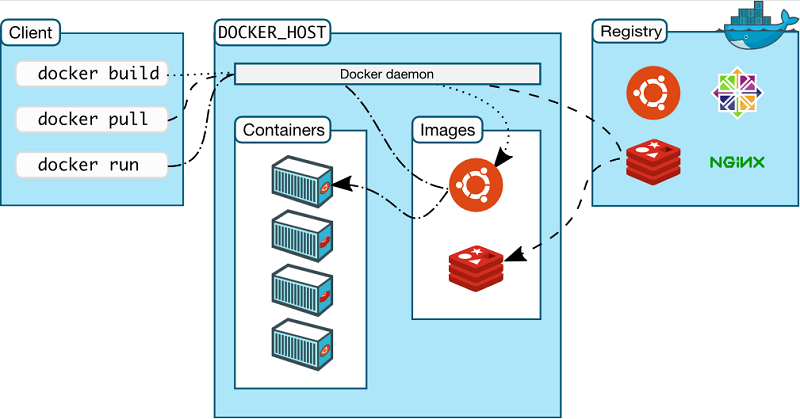

You have surely heard about it: it’s one of the hottest technologies of the moment and it’s quickly gaining momentum. The numbers presented at DockerCon 2017 are about 14 million Docker hosts, 900 thousand apps, 3,300 project contributors, 170 thousand community members and 12 billion images downloaded.

In this series of articles we’d like to introduce the basic concepts of Docker, so as to have a solid basis before exploring the large related ecosystem.

The Docker project was born as an internal project at dotCloud, a PaaS company, and was based on the LXC container runtime. It was introduced to the world in 2013 with a historic demo at PyCon, then released as an open-source project. The following year support for LXC ceased, as its development was slow and did not keep pace with Docker; Docker started to develop libcontainer (later runc), written entirely in Go, with better performance, improved security and a higher degree of isolation between containers. Since then there has been a crescendo of sponsorships, investments and general interest that has elevated Docker to a de facto standard.

It’s part of the Open Container Project Foundation, a Linux Foundation project that governs the open standards of the container world and includes members such as AT&T, AWS, Dell EMC, Cisco, IBM, Intel and the like.

Docker is based on a client-server architecture: the client communicates with the dockerd daemon, which builds, runs and distributes containers. Client and daemon can run on the same host or on different systems; the client talks to the daemon through a REST API, over a Unix socket or a network interface. A registry contains images; Docker Hub is a public cloud registry, Docker Registry is a private, on-premises registry.
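To make the client-server exchange concrete, here is a minimal sketch of a client that sends an HTTP request to the daemon over a Unix socket, using only the Python standard library. It assumes the daemon listens on `/var/run/docker.sock` (the usual default on Linux) and that the caller has permission to access it; `UnixHTTPConnection` and `daemon_get` are hypothetical helper names, not part of any Docker library.

```python
import http.client
import json
import socket

# Assumption: default location of the daemon's Unix socket on Linux.
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that travels over a Unix socket instead of TCP."""

    def __init__(self, socket_path, timeout=5.0):
        # The host name is only used for the Host header.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def daemon_get(path, socket_path=DOCKER_SOCKET):
    """Send GET <path> to the daemon and return (status, decoded JSON body)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return response.status, json.loads(response.read())
    finally:
        conn.close()
```

On a host with a running daemon, `daemon_get("/version")` should return status 200 and a JSON object describing the engine. The `docker` command-line client issues requests of the same kind through this API.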

Read more ...

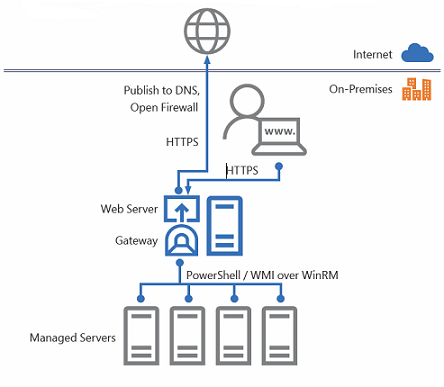

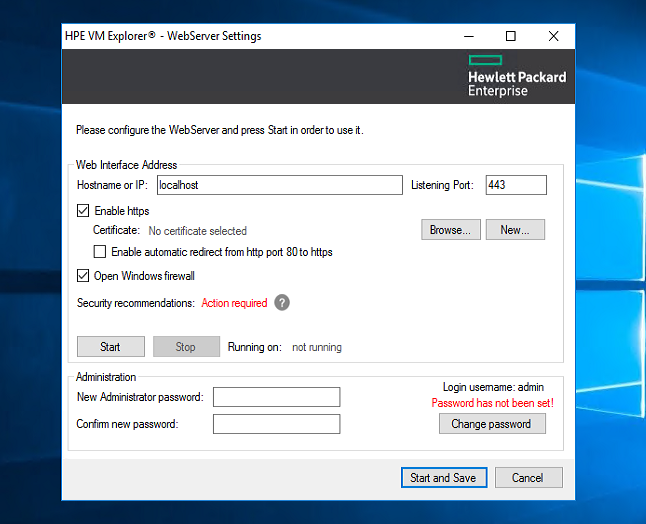

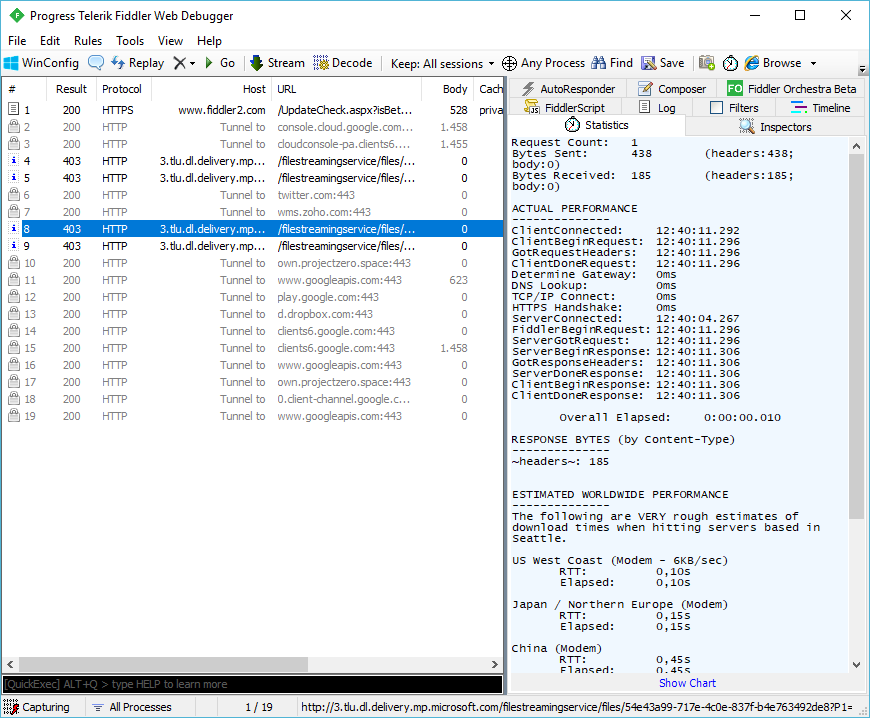

Announced on 14 September with a TechNet blog post, Project Honolulu is the free, Web-based HTML5 platform for centralized management of hosts and clusters that lets you control, manage and troubleshoot Windows Server environments from a single panel. It is currently available as a “technical preview”.

Typically, the administration of Windows Server environments relies on MMC (Microsoft Management Console) and other graphical tools, in addition to PowerShell, which provides a powerful and complete scripting system capable of a high level of automation.

Project Honolulu can be compared to VMware vCenter’s Web Client, albeit with some differences. It is a centralized management solution for Windows Server hosts and clusters, conceived not as a replacement for System Center and the Operations Management Suite, but as a complementary tool.

Project Honolulu is the natural evolution of Server Management Tools (SMT), the analogous tool retired a few months ago because it ran on Azure and therefore required a constant Internet connection, which can’t always be guaranteed. Honolulu represents its local, on-prem version. It’s not a substitute for MMC.

During the last Ignite, Microsoft introduced the project with two demo sessions and covered the topic with a blog post.

Read more ...